Sunday, December 17, 2006

Using GUI Tools

Friday, December 15, 2006

Using Local Startup Scripts

The usual reason to use a local startup script is that you're adding a server that you don't want to run via a super server and that doesn't come with an appropriate SysV startup script. Because SysV startup scripts are closely tied to specific distributions, you might not have an appropriate SysV startup script if you obtained a server from a source other than your own distribution. For instance, if you're running Mandrake but install a server intended for SuSE, the SuSE SysV startup script may not work on your Mandrake system. You may also run into this problem if you obtain the server in source code form from the server's author; an original source such as this is unlikely to include customizations for specific Linux distributions. The code may compile and run just fine, but you'll need to start the server yourself.

Of course, it's possible to write your own SysV startup scripts for such servers. You can do this by modifying a working SysV startup script. You might try modifying the startup script for an equivalent tool (such as an earlier version of the server you're installing, if you've resorted to a third-party source because your distribution's official tool is out of date), or some randomly selected startup script. This process can be tricky, though, particularly if you're not familiar with your distribution's startup script format or with shell scripting in general. (SysV startup scripts are written in the bash shell scripting language.) If you run into problems, or if you're in a hurry and don't want to put forth the effort to create or modify a SysV startup script, you can start the server from the local startup script instead.

You can modify the local startup script using your favorite text editor. To start a server, simply include lines in the file that are the same as the commands you'd type at a command prompt to launch the program. For instance, the following line starts a Telnet server:

/usr/sbin/in.telnetd

If the server doesn't start up in daemon mode by default (that is, if it doesn't run in the background, relinquishing control of your shell if you launch it directly), you should use an ampersand (&) at the end of the command line to tell the server to run in the background. Failure to do this will cause the execution of the startup script to halt at the call to the server. This may be acceptable if it's the last line in the script, but if you want to start additional servers, the subsequent servers won't start if you omit the ampersand.

You may, of course, do something more complex than launching a server using a single line. You could use the bash shell's conditional expressions to test that the server file exists, or launch it only under certain circumstances. These are the sorts of tasks that are usually performed in SysV startup scripts, though, so if you want to go to that sort of effort, you might prefer writing your own SysV startup script.
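As a rough sketch of the conditional approach just described, a local startup script could wrap each launch in a small helper. The helper name start_if_present is made up for this illustration, and whether a given server really needs the trailing ampersand depends on whether it puts itself in the background.

```shell
#!/bin/sh
# Sketch for a local startup script (rc.local, boot.local, or similar).
# start_if_present is a hypothetical helper, not a standard command.
start_if_present() {
    server="$1"
    shift
    if [ -x "$server" ]; then
        # The ampersand backgrounds the server so later lines still run.
        "$server" "$@" &
        echo "started $server (PID $!)"
    else
        echo "skipping $server: not found or not executable" >&2
        return 1
    fi
}

# A missing server should not abort the rest of the script.
start_if_present /usr/sbin/in.telnetd || true
```

Any further servers can be started with additional start_if_present lines; each one runs whether or not the previous server was found.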

One important point to keep in mind is that different distributions' local startup scripts aren't exactly equivalent to one another. For instance, SuSE runs its boot.local script earlier in the boot process than Red Hat runs its rc.local. Therefore, SuSE's local startup script is more appropriate for bringing up interfaces or doing other early startup tasks, whereas Red Hat's script is better for launching servers that rely on an already-up network connection. If the tasks you want to perform in the startup script are very dependent upon the presence or absence of other servers, you may be forced to create a SysV startup script with a sequence number that's appropriate for the tasks you want to perform.

The usual reason for using a local startup script is to create a quick-and-dirty method of launching a server or running some other program. Once launched, the local startup script provides no easy way to shut down the server (as does the stop parameter to most SysV startup scripts); you'll have to use kill, killall, or a similar tool to stop the server, if you need to do so.

Thursday, December 14, 2006

Setting Access Control Features

- Host-based restrictions— The only_from and no_access options are conceptually very similar to the contents of the /etc/hosts.allow and /etc/hosts.deny files for TCP Wrappers, but xinetd sets these features in its main configuration file or server-specific configuration file. Specifically, only_from sets a list of computers that are explicitly allowed access (with all others denied access), whereas no_access specifies computers that are to be blacklisted. If both are set for an address, the one with a more specific rule takes precedence. For both options, addresses may be specified by IP address (for instance, 172.23.45.67), by network address using a trailing .0 (for instance, 172.23.0.0 for 172.23.0.0/16) or with an explicit netmask (as in 172.23.0.0/16), a network name listed in /etc/networks, or a hostname (such as badguy.threeroomco.com). If a hostname is used, xinetd does a single lookup on that hostname at the time the server starts, so if the hostname-to-IP address mapping changes later, a hostname-based rule may no longer match the intended computer.

- Temporal restrictions— You can specify a range of times during which the server is available by using the access_times option. This option requires an access time specified as hour:minute-hour:minute, such as 08:00-18:00, which restricts access to the server to the hours of 8:00 AM to 6:00 PM. Times are specified in a 24-hour format. Note that this restricts only the initial access to the server. For instance, if the Telnet server is restricted to 8:00 AM to 6:00 PM, somebody could log in at 5:58 PM and stay on indefinitely.

- Interface restrictions— You can bind a server to just one network interface using the bind or interface options (they're synonyms), which take the IP address associated with an interface. For instance, if eth1 is linked to 172.19.28.37, bind = 172.19.28.37 links a server only to eth1. Any attempt to access the server from eth0 is met with silence, as if the server weren't running at all. This feature is most useful on routers and other computers linked to more than one network, but it's also of interest to those with small internal networks and dial-up links. For instance, you can bind servers like Telnet or FTP to your local Ethernet interface, and they won't be available to the Internet at large via your PPP connection.
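The three kinds of restriction can be combined in one service definition. The fragment below is only a sketch: the addresses are the illustrative ones used in the list above, and the basic fields are copied from the Telnet definition shown in the xinetd.conf format discussion.

```conf
service telnet
{
    socket_type  = stream
    protocol     = tcp
    wait         = no
    user         = root
    server       = /usr/sbin/in.telnetd
    # Allow the 172.23.0.0/16 network, but not this one host;
    # the more specific no_access rule wins for that address.
    only_from    = 172.23.0.0/16
    no_access    = 172.23.45.67
    # Accept new connections only between 8:00 AM and 6:00 PM.
    access_times = 08:00-18:00
    # Listen only on the interface that holds this address.
    bind         = 172.19.28.37
}
```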

Wednesday, December 13, 2006

The /etc/xinetd.conf File Format

Whether it's located in /etc/xinetd.conf or a file in /etc/xinetd.d, a xinetd server definition spans several lines; however, a basic definition includes the same information as an inetd.conf entry. For instance, the following xinetd definition is mostly equivalent to the inetd.conf entry presented earlier for a Telnet server:

service telnet

{

socket_type = stream

protocol = tcp

wait = no

user = root

server = /usr/sbin/in.telnetd

}

This entry provides the same information as an inetd.conf entry. The xinetd configuration file format, however, explicitly labels each entry and splits them across multiple lines. Although this example presents data in the same order as does the inetd configuration, this order isn't required. Also, the xinetd definition doesn't call TCP Wrappers, although it could (you'd list /usr/sbin/tcpd on the server line, then add a server_args line that would list /usr/sbin/in.telnetd to pass the name of the Telnet server to TCP Wrappers).
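For comparison, the TCP Wrappers variant mentioned above might look like the following sketch, which changes only the server line and adds a server_args line:

```conf
service telnet
{
    socket_type = stream
    protocol    = tcp
    wait        = no
    user        = root
    # tcpd runs first and is handed the real server's name.
    server      = /usr/sbin/tcpd
    server_args = /usr/sbin/in.telnetd
}
```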

In addition to the standard inetd features, xinetd provides many configuration options to expand its capabilities. Most of these are items that appear on their own lines between the curly braces in the service definition. The most important of these options include the following:

- Security features— As noted earlier, xinetd provides numerous security options, many of which are roughly equivalent to those provided by TCP Wrappers. These are discussed in greater depth in the upcoming section "Setting Access Control Features."

- Disabling a server— You can disable an inetd server by commenting out its configuration line. You can accomplish the same goal by adding the disable = yes line to a xinetd server definition. The same effect can be achieved in the main /etc/xinetd.conf file by using the disabled = server_list option in the defaults section, where server_list is a space-delimited list of server names. Various configuration tools use one of these methods to disable servers, and in fact a disable = no line may be present for servers that are active.

- Redirection— If you want to pass a request to another computer, you can use the redirect = target option, where target is the hostname or IP address of the computer that should receive the request. For instance, if you include the redirect = 192.168.3.78 line in the /etc/xinetd.d/telnet file of dummy.threeroomco.com, attempts to access the Telnet server on dummy.threeroomco.com will be redirected to the internal computer on 192.168.3.78. You might want to use this feature on a NAT router to allow an internal computer to function as a server for the outside world. The iptables utility can accomplish the same goal at a somewhat lower level, but doing it in xinetd allows you to apply xinetd's access control features.

- Logging— You can fine-tune xinetd's logging of access attempts using the log_on_success and log_on_failure options, which determine what information xinetd logs on successful and unsuccessful attempts to access a server. These options take values such as PID (the server's process ID, or PID), HOST (the client's address), USERID (the user ID on the client system associated with the access attempt), EXIT (the time and exit status of the access termination), and DURATION (how long the session lasted). When setting these values, you can use a += or -= symbol, rather than =, to add or subtract the features you want to log from the default.

- Connection load limits— You can limit the number of connections that xinetd will handle in several ways. One is the per_source option, which specifies how many connections xinetd will accept from any given source at any one time. (UNLIMITED sets xinetd to accept an unlimited number of connections.) The instances option specifies the maximum number of processes xinetd will spawn (this value may be larger than the per_source value). The cps option takes two space-separated values: the number of connections xinetd accepts per second and the number of seconds to pause after this limit is reached before enabling access again. You can adjust the scheduling priority of the servers that xinetd runs using the nice option, which sets a value in much the same way as the nice program. Finally, max_load takes a floating-point value that represents the system load average above which xinetd refuses further connections. Taken together, these options can reduce the chance that your system will experience difficulties because of certain types of denial of service (DoS) attacks or because of a spike in the popularity of your servers.
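A service definition that uses several of these options might look like the sketch below. The numeric values are arbitrary examples rather than recommendations, and the commented-out redirect line assumes the address-plus-port form that the xinetd releases I'm familiar with expect; check your xinetd.conf man page before relying on it.

```conf
service telnet
{
    disable         = no
    socket_type     = stream
    protocol        = tcp
    wait            = no
    user            = root
    server          = /usr/sbin/in.telnetd
    # Add session length and remote user ID to the default log items.
    log_on_success += DURATION
    log_on_failure += USERID
    # At most 30 server processes in total, 5 per client address.
    instances       = 30
    per_source      = 5
    # 10 connections per second, then pause for 30 seconds.
    cps             = 10 30
    # Run the server at reduced scheduling priority.
    nice            = 10
    # Refuse new connections when the load average exceeds 3.5.
    max_load        = 3.5
    # To forward requests instead of running a local server, a
    # redirect line would take the place of the server line:
    # redirect      = 192.168.3.78 23
}
```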

If you make changes to the /etc/xinetd.conf file or its included files in /etc/xinetd.d, you must restart the xinetd server program. Because xinetd itself is usually started through a SysV startup script, you can do this by typing a command such as /etc/rc.d/init.d/xinetd restart, although the startup script may be located somewhere else on some distributions. Alternatively, you can pass xinetd the SIGUSR1 or SIGUSR2 signals via kill. The former tells xinetd to reload its configuration file and begin responding as indicated in the new file. The latter does the same, but also terminates any servers that have been inactivated by changes to the configuration file.

Tuesday, December 12, 2006

Using xinetd

Monday, December 11, 2006

Using TCP Wrappers

TCP Wrappers is controlled through two files: /etc/hosts.allow and /etc/hosts.deny. These files have identical formats, but they have opposite actions—hosts.allow specifies computers that are to be allowed access to the computer, with all others denied access; hosts.deny specifies computers that are to be denied access, with all others allowed access. When a server is listed in both files, hosts.allow takes precedence. This allows you to set a restrictive policy in hosts.deny but override it to grant access to specific computers. For instance, you could disallow access to all computers in hosts.deny, then loosen that restriction in hosts.allow. If a server isn't specified in either file (either explicitly or through a wildcard, as discussed shortly), TCP Wrappers grants access to that server to all systems.

As with many other configuration files, a pound sign (#) at the start of a line indicates a comment. Other lines take the following form:

daemon-list : client-list

The daemon-list is a list of one or more servers to which the rule applies. If the list contains more than one server, commas or spaces may separate the server names. The names are those listed in /etc/services. The ALL wildcard is also accepted; if the daemon-list is ALL, then the rule applies to all servers controlled by TCP Wrappers.

The client-list is a list of computers that are to be allowed or denied. As with the daemon-list, the client-list can consist of just one entry or a list separated by commas or spaces. You can specify computers in any of several ways:

- IP addresses— You can list complete IP addresses, such as 10.102.201.23. Such an entry will match that IP address and that IP address only.

- IP address range— There are several ways to specify ranges of IP addresses. The simplest is to provide fewer than four complete bytes followed by a period. For instance, 10.102.201. matches the 10.102.201.0/24 network. You can also use an IP address/netmask pair, such as 10.102.201.0/24. IPv6 addresses are also supported, by a specification of the form [n:n:n:n:n:n:n:n]/len, where the n values are the IPv6 address and len is the length in bits of the range to be matched.

- Hostname— You can provide a complete hostname for the computer, such as badcracker.threeroomco.com. This will match that computer only. Because this method relies upon a hostname lookup, though, it's subject to problems if your DNS servers go down or if the person who controls the domain modifies its entries.

- Domain— You can match an entire domain or subdomain much as you can match a single hostname. The difference is that you must precede the domain name with a period, as in .threeroomco.com—this example matches all the computers in the threeroomco.com domain.

- NIS netgroup name— If a string is preceded by an at symbol (@), the string is treated as a Network Information Services (NIS) netgroup name. This method relies upon your network having a functioning NIS configuration.
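To make the trailing-period and leading-period forms concrete, here is a small shell sketch that imitates just those two matching rules plus exact matches. It is not how TCP Wrappers is implemented, client_matches is a name invented for this illustration, and netmask, IPv6, and netgroup forms are deliberately left out.

```shell
#!/bin/sh
# Illustration only: imitates three client-list forms from the list above.
# client_matches is a hypothetical helper, not part of TCP Wrappers.
# $1 = client IP address or hostname, $2 = pattern from the client-list
client_matches() {
    case "$2" in
        *.) # Trailing period: match on the leading bytes of an IP address.
            case "$1" in "$2"*) return 0 ;; esac ;;
        .*) # Leading period: match every host inside the domain.
            case "$1" in *"$2") return 0 ;; esac ;;
        *)  # Anything else: exact IP address or hostname only.
            if [ "$1" = "$2" ]; then return 0; fi ;;
    esac
    return 1
}

client_matches 10.102.201.23 10.102.201. && echo "address is in 10.102.201.0/24"
client_matches gingko.threeroomco.com .threeroomco.com && echo "host is in the domain"
```

The hostname gingko.threeroomco.com is made up for the example. Note that the bare name threeroomco.com would not match the .threeroomco.com pattern in this sketch, because it lacks the leading period.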

In addition, the client-list specification supports more wildcards than does the daemon-list specification. Specific wildcards you may use include the following:

- ALL— This wildcard matches all computers.

- LOCAL— This wildcard is intended to match all local computers, based on hostname. If the computer's hostname lacks a period, it's considered local.

- UNKNOWN— This wildcard matches computers whose hostnames aren't known by your name resolution system.

- KNOWN— This wildcard matches computers whose hostname and IP addresses are both known to the system.

- PARANOID— This wildcard matches computers whose names and IP addresses don't match.

These last three options should be used with care, since they usually depend upon proper functioning of DNS, and DNS can be unreliable because of transient network problems. For instance, if a client's own DNS system is down or inaccessible, you might be unable to verify its hostname. As an example of a short but complete /etc/hosts.allow file, consider the following:

telnet,ftp : 192.168.34. dino.pangaea.edu

ssh : LOCAL .pangaea.edu

The first line specifies identical restrictions for the Telnet and FTP servers, allowing access only to the 192.168.34.0/24 network and the host called dino.pangaea.edu. The second line applies to SSH and restricts access to local computers and all those in the pangaea.edu domain. Because no other servers are listed in the daemon-list fields, TCP Wrappers doesn't block access to any other server. For instance, if you were to run Apache through inetd and TCP Wrappers, everybody would be granted access to Apache with this configuration.

In addition to matching entire computers, you can use the user@computer form to match individual users of the remote system. This form, however, requires that the client computer run an ident (aka auth) server, which returns the name of the user who is using a given network port. Your own server can query the client's ident server about the connection attempt, thus getting the username associated with that attempt. This may cause additional delays, however, and the information often isn't particularly trustworthy, especially from random hosts on the Internet. (You're more likely to want to use this feature to control access from specific users of systems that you control.)

The EXCEPT operator is another special keyword; it specifies exceptions to the rules just laid out. For instance, consider the following /etc/hosts.deny entry:

www : .badcracker.org EXCEPT goodguy@exception.badcracker.org

This example denies access to the Web server to all the computers in the badcracker.org domain, unless the connection is coming from goodguy@exception.badcracker.org. (Because /etc/hosts.allow takes precedence over /etc/hosts.deny, entries in the former can also override those in the latter.)

If your goal is to run a very secure system, you may want to begin with the following /etc/hosts.deny file:

ALL : ALL

This blocks access to all computers for all servers handled by TCP Wrappers. You must then explicitly open access to other servers in /etc/hosts.allow. You should open access as little as possible. For instance, you might only give access to computers in a specific network block or domain for sensitive servers like Telnet. (Telnet passes all data in an unencrypted form, so it's a poor choice for logins over the Internet as a whole. See Chapter 13, Maintaining Remote Login Servers, for a discussion of this issue.)

Sunday, December 10, 2006

The /etc/inetd.conf File Format

telnet stream tcp nowait root /usr/sbin/tcpd in.telnetd

Each line consists of a series of fields, which are separated by spaces or tabs. The meaning of each field is as follows:

- Server name— The first field is the name of the server protocol, as recorded in /etc/services. In the case of the preceding example, the server protocol name is telnet, and if you check /etc/services, you'll see that this name is associated with 23/tcp—in other words, TCP port 23. There must be an entry in /etc/services in order for inetd to handle a server. For this reason, you may need to edit /etc/services if you want inetd to handle some unusual server. In most cases, though, /etc/services has the appropriate entries already.

- Socket type— The second field relates the network socket type used by the protocol. Possible values are stream, dgram, raw, rdm, and seqpacket.

- Protocol type— The third field is the name of the protocol type, which in this context means a low-level network stack protocol such as TCP or UDP. Possible protocol values appear in /etc/protocols, but the most common values are tcp and udp.

- Wait/Nowait— The fourth field takes just one of two values, wait or nowait, and is meaningful only for datagram (dgram) socket types (other socket types conventionally use a nowait value). Most datagram servers connect to a socket and free up inetd to handle subsequent connection attempts. These servers are called multi-threaded, and they require a nowait entry. Servers that connect to a socket, process all input, and then time out are said to be single-threaded, and they require wait entries. You may optionally add a number to these values, separated by a period, as in wait.60. This specifies the maximum number of servers of the given type that inetd may launch in one minute. The default value is 40.

- Username— You can tell inetd to launch a server with a specific user's privileges. This can be an important security feature, because restricting privileges for servers that don't require extensive access to the system can prevent a bug from causing a security breach. For instance, the Apache Web server usually doesn't need unusual privileges, so you could launch it as nobody or as some special account intended only for Apache. The preceding example shows the username as root because root privileges are needed to launch the login processes required by a Telnet server. If you add a period and a group name, the specified group name is used for the server's group privileges. For instance, nobody.nogroup launches a server with the username nobody and the group nogroup.

- Server program— The sixth field specifies the name of the server program that inetd launches when it detects an incoming connection. The preceding example gives /usr/sbin/tcpd as this program name. In reality, tcpd is not a server; it's the program file for the TCP Wrappers program, which is described shortly. Most Linux distributions that use inetd also use TCP Wrappers, and so launch most inetd-mediated servers through tcpd. You can bypass TCP Wrappers for any server you like, although it's generally best to use TCP Wrappers for reasons that are described shortly.

- Server program arguments— The final field is optional. When present, it contains any arguments that are to be passed to the server program. These arguments might modify the server's behavior, tell it where its configuration files are, and so on. In the case of servers launched through TCP Wrappers, the argument is the name of the ultimate target server, such as in.telnetd in the preceding example. (You may add the ultimate server's arguments to this list, if it needs any.)
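The field layout described above can be checked with a few lines of shell. This is only a sketch for inspecting an entry by eye; describe_inetd_entry is a hypothetical helper name, and the variable names simply mirror the field descriptions in the list.

```shell
#!/bin/sh
# Split one inetd.conf entry into its seven fields and label them.
# describe_inetd_entry is a hypothetical helper for illustration.
describe_inetd_entry() {
    printf '%s\n' "$1" | {
        # read splits on spaces and tabs; the last variable (args)
        # receives everything left over, so multiple arguments survive.
        read -r name socktype proto waitflag user server args
        printf 'name=%s\n' "$name"
        printf 'socket=%s\n' "$socktype"
        printf 'protocol=%s\n' "$proto"
        printf 'wait=%s\n' "$waitflag"
        printf 'user=%s\n' "$user"
        printf 'server=%s\n' "$server"
        printf 'args=%s\n' "$args"
    }
}

describe_inetd_entry 'telnet stream tcp nowait root /usr/sbin/tcpd in.telnetd'
```

For the sample Telnet entry, the server field is /usr/sbin/tcpd and the args field is in.telnetd, which matches the TCP Wrappers arrangement described in the last two list items.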

You can edit /etc/inetd.conf using any text editor you like. Be sure that any new entries you create, or existing ones you modify, span a single line. (Long filenames, server arguments, and the like sometimes produce lines long enough that some editors will try to wrap the line onto two lines, which will cause problems.) If you need to add an entry for a server you've installed, consult the server's documentation to learn what its inetd.conf entry should look like. In some cases, you can model an entry after an existing one, but without knowing what values to enter for the server name, socket type, and so on, such an entry might not work.

Most distributions that use inetd ship with an /etc/inetd.conf file that contains entries for many different servers. Many of these entries are commented out, so they're inactive. You can activate a server by uncommenting such a line, if the matching server is installed. Some inetd.conf files include multiple entries for any given service; for instance, a file might have two or three different entries for different FTP servers. If yours is like this, be sure you uncomment only the line corresponding to the specific server you've installed, such as ProFTPd or WU-FTPD.

Thursday, December 7, 2006

Using inetd

The inetd program is one of two common super servers for Linux. These are servers that function as intermediaries; instead of running the target server itself, the computer runs the super server, which links itself to the ports used by all of the servers it needs to handle. Then, when a connection is made to one of those ports, the super server launches the target server and lets it handle the data transfer. This has two advantages over running a server directly. First, the memory load is reduced; a super server consumes little RAM compared to the servers it handles, particularly if it handles many servers. Second, the super server can filter incoming requests based on various criteria, which can improve your system's security. The drawback is that it takes time for the super server to launch the target server, so the time to connect to a server may be increased, although usually only by a second or two. As a general rule, it's good to use super servers for small or seldom-used servers, whereas big and frequently used servers should be run directly.

Wednesday, December 6, 2006

Setting and Changing the Runlevel

How does Linux know what runlevel to enter when it boots, though? That's set in the /etc/inittab file, which is the configuration file for init, which is the first process that Linux runs and the progenitor of all other processes. Specifically, /etc/inittab contains a line such as the following:

id:5:initdefault:

The leading id is the key to identifying this line, but the following number (5 in this example) is what you'll use to permanently set the runlevel. If you change this value and reboot, the computer will come up in a different runlevel. Runlevels 0, 1, and 6 have special meanings—they correspond to a system shutdown, a single-user mode, and a system reboot, respectively. Runlevels 2 through 5 are normal runlevels, but their precise meanings vary from one distribution to another. For Caldera, Red Hat, Mandrake, SuSE 7.3, and TurboLinux, runlevel 3 is a normal text-mode startup (X is not started), and runlevel 5 is a GUI login mode (X is started, and a GUI login program is run). Earlier versions of SuSE use runlevels 2 and 3 instead of 3 and 5, and Slackware uses 3 and 4 for text-mode and GUI logins. By default, Debian doesn't run any SysV scripts differently in different normal operational runlevels, although it runs fewer text-mode login tools in runlevels above 3 (this detail is handled by subsequent lines of /etc/inittab). Most distributions include a series of comments in their /etc/inittab files describing the function of each runlevel, so look for that if you need more information or are using a distribution I don't discuss in this book.
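If you'd rather not open /etc/inittab in an editor just to check the default, a short awk call can pull the number out. The sketch below assumes the colon-separated layout shown in the example line; default_runlevel is a name invented for this illustration.

```shell
#!/bin/sh
# Print the default runlevel recorded in an inittab-format file.
# default_runlevel is a hypothetical helper for illustration.
# $1 = path to the file (normally /etc/inittab)
default_runlevel() {
    # Fields are colon-separated: id:runlevels:action:process.
    # Skip comment lines and report the first initdefault entry.
    awk -F: '!/^#/ && $3 == "initdefault" { print $2; exit }' "$1"
}

# Only query the real file if this system has one.
if [ -r /etc/inittab ]; then
    default_runlevel /etc/inittab
fi
```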

If you want to change the runlevel temporarily, you can do so with the telinit command (init also works on most systems). The syntax for this command is as follows:

telinit [-t seconds] [runlevel]

When you change runlevels, it's possible that certain processes will have to be killed. Linux can do this through either a SIGTERM or a SIGKILL signal. The former is a more polite way of doing the job; it lets the program close its own files and so on. SIGKILL, by contrast, unceremoniously kills the program, which can lead to file corruption. When you change runlevels, telinit first tries to use a SIGTERM. Five seconds later, if a process hasn't quit, telinit sends a SIGKILL. If specified, the -t seconds parameter changes this interval. In most cases, the default five seconds is adequate.

The runlevel specification is a single-character code for the runlevel. Most obviously, you can provide a runlevel number. There are certain other characters you can pass that have special meaning, however:

- a, b, or c— Some entries in /etc/inittab use runlevels of a, b, or c for special purposes. If you specify this option to telinit, the program only handles those /etc/inittab entries; the system's runlevel is not changed.

- Q or q— If you pass one of these values as the runlevel, telinit reexamines the /etc/inittab file and initiates any changes.

- S or s— This option causes the system to change into a single-user mode.

- U or u— This option causes the init process to restart itself, without reexamining the /etc/inittab file.

Why would you want to change the runlevel? Changing the default runlevel allows you to control which servers start up by default. On most distributions, the most important of these is the X server. This is usually started by a special line towards the end of /etc/inittab, not through a SysV startup script, although some use a SysV startup script for XDM to do the job. Changing the runlevel while the computer is running allows you to quickly enable or disable a set of servers, or change out of GUI mode if you want to temporarily shut down X when your system is configured to start it automatically.

Tuesday, December 5, 2006

Using Startup Script Utilities

Using chkconfig

The SysV startup script tool with the crudest user interface is chkconfig. This program is a non-interactive command—you must provide it with the information it needs to do its job on a single command line. The syntax for the command is as follows:

chkconfig <--list|--add|--del> [name]

chkconfig [--level levels] name <on|off|reset>

The first format of this command is used to obtain information on the current configuration (using --list) or to add or delete links in the SysV link script directory (using --add or --del, respectively). The second format is used to enable or disable a script in some or all runlevels by changing the name of the SysV link, as described earlier. Some examples will help clarify the use of this command.

Suppose you want to know how Postfix is configured. If you know the Postfix startup script is called postfix, you could enter the following command to check its configuration:

# chkconfig --list postfix

postfix 0:off 1:off 2:on 3:on 4:on 5:on 6:off

This output shows the status of Postfix in each of the seven runlevels. You can verify this by using the find command described earlier. When chkconfig shows an on value, the startup link filename should start with S, and when chkconfig shows an off value, the startup link filename should start with K.
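The S and K naming rule is simple enough to check with a few lines of shell. The sketch below assumes Red Hat-style link directories under /etc/rc.d (other distributions keep them elsewhere) and two-digit sequence numbers; link_state is a name invented for this illustration.

```shell
#!/bin/sh
# Translate a SysV startup link name into the on/off value that
# chkconfig would be expected to report for it.
# link_state is a hypothetical helper for illustration.
link_state() {
    case "$(basename "$1")" in
        S[0-9][0-9]*) echo on ;;       # S links start the server
        K[0-9][0-9]*) echo off ;;      # K links stop (kill) it
        *)            echo unknown ;;
    esac
}

# Report every Postfix link found; the directory layout is an assumption.
for link in /etc/rc.d/rc?.d/[SK]??postfix; do
    [ -e "$link" ] || continue
    echo "$link: $(link_state "$link")"
done
```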

If you type chkconfig --list without specifying a particular startup script filename, chkconfig shows the status for all the startup scripts, and possibly for servers started through xinetd as well, if your system uses xinetd.

The --add and --del options add links if they don't exist or delete them if they do exist, respectively. Both these options require that you specify the name of the original startup script. For instance, chkconfig --del postfix removes all the Postfix SysV startup script links. The result is that Linux won't start the server through a SysV startup script, or attempt to change a server's status when changing runlevels. You might do this if you wanted to start a server through a super server or local configuration script. You might use the --add parameter to reverse this change.

You'll probably make most chkconfig changes with the on, off, or reset options. These enable a server, disable a server, or return the server to its default setting for a specified runlevel. (If you omit the --level option, the change applies to all runlevels.) For instance, suppose you want to disable Postfix in runlevel 3. You could do so by typing the following command:

# chkconfig --level 3 postfix off

This command won't return any output, so if you feel the need to verify that it's worked, you should use the --list option or look for a changed filename in the startup script link directory. You can enable a server by using on rather than off. If you want to affect more than one runlevel, you can do so by listing all the runlevels in a single string, as in 345 for runlevels 3, 4, and 5. If you've experimented with settings and want to return them to their defaults, you can use the reset option:

# chkconfig postfix reset

This command will reset the SysV startup script links for Postfix to their default values. You can reset the default for just one runlevel by including the --level levels option as the first parameter to the command.

Although chkconfig is generally thought of as a way to manage SysV startup scripts, it can also work on xinetd configurations on many systems. If chkconfig is configured to treat, say, your FTP server as one that's launched via a super server, you can use it to manipulate this configuration much as if the FTP server were launched through SysV startup scripts. The difference is that the --level levels parameter won't work, and the --list option doesn't show runlevel information. Instead, any server started through a super server will run in those runlevels in which xinetd runs. Furthermore, the --add and --del options work just like on and off, respectively; the /etc/xinetd.d configuration files aren't deleted, only disabled, as described in the upcoming section, "Using xinetd."

When you change the SysV configuration with chkconfig, it does not automatically alter the servers that are running. For instance, if you reconfigure your system to disable sshd, that server will not be immediately shut down without manual intervention of some sort, such as running the SysV startup script with the stop option to stop the server.

Using ntsysv

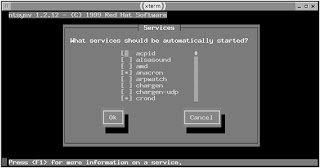

The ntsysv program provides a text-based menu environment for manipulating server startup. To run the program, type its name, optionally followed by --level levels, where levels is one or more runlevels you want to modify. If you omit this parameter, ntsysv modifies the configuration only for the current runlevel. Either way, the result should resemble the figure shown here.

Figure The ntsysv program provides an easy-to-use interface for SysV configuration.

The ntsysv display shows all the servers that have SysV startup scripts. Some versions also display servers started through xinetd. To enable or disable a server, use the arrow keys on your keyboard to move the cursor to the server you want to adjust, then press the spacebar to toggle the server on or off. An asterisk (*) in the box to the left of the server name indicates that the server is active; an empty box indicates that the server is disabled. When you're done making changes, use the Tab key to highlight the OK button, then press the Enter key to exit from the program and save your changes.

As with chkconfig, you can't adjust the specific runlevels in which servers mediated by a super server are run, except by changing the runlevels for the super server itself. Also, disabling a server won't cause it to shut down immediately; you must do that by manually shutting the server down, as described earlier, or by restarting the super server, if your target server is handled by the super server.

Monday, December 4, 2006

Manually Enabling or Disabling Startup Scripts

A more elegant solution is to rename the link to the startup script in the SysV link directory that corresponds to your current runlevel. For instance, to disable a server, rename it so that it starts with K rather than S; to enable it, reverse this process. The difficulty with this procedure is that the sequence number isn't likely to be the same when killing the server as when starting it. One way around this potential pitfall is to locate all the links to the script in all the runlevels. If any of the other runlevels sport the configuration you want, you've found the sequence number. For instance, the following command finds all the links for the Postfix mail server startup scripts on a Mandrake system:

$ find /etc/rc.d -name "*postfix"

/etc/rc.d/rc0.d/K30postfix

/etc/rc.d/rc1.d/K30postfix

/etc/rc.d/rc2.d/S80postfix

/etc/rc.d/rc3.d/S80postfix

/etc/rc.d/rc4.d/S80postfix

/etc/rc.d/rc5.d/S80postfix

/etc/rc.d/rc6.d/K30postfix

/etc/rc.d/init.d/postfix

This sequence shows that Postfix is started with a sequence number of 80 in runlevels 2 through 5, and shut down with a sequence number of 30 in runlevels 0, 1, and 6. If you wanted to disable Postfix in runlevel 3, you could do so by renaming S80postfix to K30postfix in that runlevel's directory.
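The renaming procedure just described can be sketched as a short shell function. This is only an illustration under stated assumptions: the function name disable_in_runlevel and the RC_ROOT variable are hypothetical, and the directory layout is the /etc/rc.d/rc?.d arrangement from the Mandrake example. The function looks up the K link name already used in another runlevel and renames the S link to match.

```shell
# Hypothetical helper: disable NAME in runlevel LEVEL by renaming its S link
# to the K link name already used for NAME in another runlevel.
# RC_ROOT defaults to /etc/rc.d, the location used in the example above.
disable_in_runlevel() {
    name=$1
    level=$2
    root=${RC_ROOT:-/etc/rc.d}
    dir="$root/rc$level.d"

    # Find the existing start link in the target runlevel, if any.
    slink=""
    for f in "$dir"/S[0-9][0-9]"$name"; do
        if [ -e "$f" ] || [ -L "$f" ]; then
            slink=$f
        fi
    done
    [ -n "$slink" ] || { echo "$name has no S link in $dir" >&2; return 1; }

    # Borrow the kill link name (for instance K30postfix) from another runlevel.
    klink=$(find "$root" -name "K[0-9][0-9]$name" | head -n 1)
    [ -n "$klink" ] || { echo "no K link found for $name" >&2; return 1; }

    mv "$slink" "$dir/${klink##*/}"
}
```

Run as root, disable_in_runlevel postfix 3 would perform the S80postfix to K30postfix rename described above. Setting RC_ROOT to a scratch copy of the directory tree lets you try the function without touching the live configuration.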

If you want to temporarily start or stop a server, or if you want to initiate a change immediately without restarting the computer or adjusting the runlevel, you can do so by running the startup script along with the start or stop parameters. For instance, to stop Postfix immediately, you could type the following command on a Mandrake system:

# /etc/rc.d/init.d/postfix stop

Most Linux distributions display a message reporting the attempt to shut down the server, and the success of the operation. When starting a SysV script, a message about the startup success appears. (In fact, you may see these messages scroll across your screen after the kernel startup messages when you boot your computer.)
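If you start and stop servers this way often, a thin wrapper can catch typing mistakes before the script runs. The sketch below is an assumption-laden illustration rather than a standard tool: the name sysv_run and the INIT_DIR variable are hypothetical, and the default path is the Mandrake location used above.

```shell
# Hypothetical wrapper: run a SysV startup script with start or stop.
# INIT_DIR defaults to /etc/rc.d/init.d, the Mandrake location used above;
# other distributions may keep their scripts elsewhere.
sysv_run() {
    name=$1
    action=$2
    script="${INIT_DIR:-/etc/rc.d/init.d}/$name"

    case $action in
        start|stop) ;;
        *) echo "action must be start or stop" >&2; return 1 ;;
    esac

    if [ ! -x "$script" ]; then
        echo "no executable startup script at $script" >&2
        return 1
    fi

    # Hand control to the real startup script and pass its exit status back.
    "$script" "$action"
}
```

Typed as root, sysv_run postfix stop is equivalent to the /etc/rc.d/init.d/postfix stop command shown earlier.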

In the case of Slackware, instead of renaming, adding, or deleting startup scripts or their links, you should edit the single startup script file for the runlevel in question. For instance, if you want to change the behavior of runlevel 4, you'd edit /etc/rc.d/rc.4. Most servers, though, aren't started in these runlevel-specific scripts; they're started in /etc/rc.d/rc.inet2, with very basic network tools started in /etc/rc.d/rc.inet1. To change your configuration, you'll have to manually modify these scripts as if they were local startup scripts, as described in the section "Using Local Startup Scripts."

Sunday, December 3, 2006

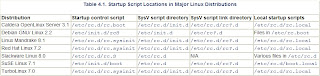

Startup Script Locations and Naming Conventions

Several distributions (notably Red Hat, Mandrake, TurboLinux, and to a lesser extent Caldera) are quite similar to each other, particularly in their placement of SysV scripts (/etc/rc.d/init.d) and the links to those scripts (/etc/rc.d/rc?.d). Others place the scripts in slightly different locations. Slackware is the most unusual in this respect. Rather than running individual scripts in a directory named after a runlevel, Slackware uses a single script for each runlevel. For instance, the /etc/rc.d/rc.4 script controls the startup of runlevel 4.

For most Linux distributions (Slackware being the major exception), the links in the SysV startup script link directories are named in a very particular way. Specifically, the filename takes the form C##name, where C is a character (S or K), ## is a two-digit number, and name is a name that's traditionally the same as the corresponding script in the SysV script directory. For instance, the link filename might be S10network or K20nfs—filenames that correspond to the network and nfs scripts, respectively. As you might guess, this naming scheme isn't random. The name portion of the link filename helps you determine what the script does; it's usually named after the original script. The leading character indicates whether the computer will start or kill (for S and K, respectively) the script upon entering the specified runlevel. Thus, S10network indicates that the system will start whatever the network script starts (basic networking features, in fact), and K20nfs shuts down whatever the nfs script controls (the NFS server, in reality). The numbers indicate the sequence in which these actions are to be performed. Thus, S10network starts networking before S55sshd starts the secure shell (SSH) server. Similar rules apply for shutdown (K) links.
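To make the C##name convention concrete, the following sketch splits a link name into its three parts using only shell parameter expansion. The function name parse_link is hypothetical, and the sketch assumes the two-digit form described above.

```shell
# Hypothetical helper: split a SysV link name of the form C##name into its
# action (start or kill), two-digit sequence number, and script name.
parse_link() {
    link=${1##*/}                  # drop any leading directory
    case $link in
        [SK][0-9][0-9]?*) ;;
        *) echo "not a SysV link name: $link" >&2; return 1 ;;
    esac

    rest=${link#?}                 # everything after the first character
    letter=${link%"$rest"}         # the first character, S or K
    name=${rest#??}                # everything after the two digits
    number=${rest%"$name"}         # the two digits

    if [ "$letter" = S ]; then action=start; else action=kill; fi
    echo "$action $number $name"
}
```

With the examples from the text, parse_link S10network prints start 10 network, and parse_link K20nfs prints kill 20 nfs.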

The names and numbers of the startup and shutdown links vary from one distribution to another. For instance, Mandrake uses S10network to start its basic networking features, but Debian uses S35networking for this function. Similar differences may exist for the scripts that launch specific servers. What's important is that all the necessary servers and processes are started in the correct order. Many networking tools must be started after basic networking features are started, for instance. It's generally not wise to change the order in which startup and shutdown scripts execute, unless you understand this sequence and the consequences of your changes.
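Before changing any sequence numbers, it can help to see the order a runlevel actually uses. The sketch below lists the start links in a link directory in sequence order; the function name start_order is hypothetical, and you supply the directory (such as /etc/rc.d/rc3.d on the distributions described above).

```shell
# Hypothetical helper: print the S (start) links in a link directory in the
# order in which they run, lowest sequence number first.
start_order() {
    dir=$1
    for f in "$dir"/S[0-9][0-9]*; do
        # Skip the unexpanded pattern when the directory has no S links.
        [ -e "$f" ] || [ -L "$f" ] || continue
        echo "${f##*/}"
    done | LC_ALL=C sort
}
```

In a directory that holds S10network and S55sshd, the output lists S10network first, confirming that basic networking comes up before the SSH server.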

One additional wrinkle requires mention: SuSE uses the /etc/rc.config file to control the SysV startup process. This file contains sections pertaining to major servers that can be started via the SysV process, and if a server is not listed for startup (via a line of the form START_SERVERNAME="yes"), SuSE doesn't start that server, even if its link name begins with S. Caldera uses a similar scheme for a few servers, but uses files in /etc/sysconfig/daemons named after the servers in question. The ONBOOT line in each of these files determines whether the system starts the server. Many startup scripts ignore this option in Caldera, though.
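When a SuSE server refuses to start even though its S link exists, checking /etc/rc.config is a sensible first step. The sketch below assumes the variable is named START_ followed by the server name in uppercase, following the form given above; actual variable names may differ from the server's name, so treat this as an illustration. The function name rc_config_starts is hypothetical.

```shell
# Hypothetical helper: report whether an rc.config-style file contains a
# START_<NAME>="yes" line for the given server name.
# The file path defaults to /etc/rc.config, as named in the text.
rc_config_starts() {
    server=$1
    file=${2:-/etc/rc.config}
    # Build the variable name, for instance START_HTTPD for httpd.
    var="START_$(printf '%s' "$server" | tr '[:lower:]' '[:upper:]')"
    grep -q "^${var}=\"yes\"" "$file"
}
```

The function returns success only when the matching line is set to "yes", so it can be used directly in an if statement.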

Saturday, December 2, 2006

Using SysV Startup Scripts

The SysV startup scheme is closely tied to the runlevel of the computer. Each runlevel is associated with a specific set of startup scripts, and hence with a specific set of services that the system runs. (SysV scripts can start more than just network servers; they can start system loggers, filesystem handlers, and so on.) Thus, configuring servers to start via SysV scripts is closely related to configuring these runlevels. This is done by altering the names of links to the startup scripts, as stored in directories associated with each runlevel.

Starting Servers

Fortunately, in many cases Linux configures things so that a server starts automatically after it's installed, or at least once you reboot the computer after installing the server. There are three major methods you can use to start a server on a regular basis: via System V (SysV) startup scripts; via a super server, such as inetd or xinetd; or via a local startup script. You can always configure any of these methods by manually editing the appropriate configuration files or scripts. With most distributions, you can also accomplish the task through the use of GUI tools. This chapter covers all these methods of starting servers. Subsequent chapters refer back to this one to convey how a specific server is most commonly started.

Friday, December 1, 2006

Using Linux NetBEUI Software

Whichever way you do it, you'll need the source code to both the Linux kernel and Samba. You can obtain these from http://www.kernel.org and http://www.samba.org, respectively, or from many common Linux download sites, like ftp://sunsite.unc.edu. Both packages can take several minutes to compile and install, so even if you don't run into problems, installing NetBEUI support is likely to take several minutes.

Once you've installed NetBEUI support, you can use several commands to enable or manipulate this support. These commands, included with the NetBEUI stack, are as follows:

- netb— Type this command followed by start to start NetBEUI on Linux. To stop NetBEUI, type netb stop. You must use this utility before you can use NetBEUI on Linux.

- nbview— Use this command if you want to check on the status of the local NetBEUI stack. This command reads the /proc/sys/netbeui file, which contains this information, and formats and parses the information for easier human consumption.

- nbstatus— This command displays information on the specified machine or workgroup; for instance, nbstatus SERVER displays information on the computer called SERVER.

- nbadmin— This command allows you to bind NetBEUI to a specific network interface, unbind NetBEUI from a specific interface, or drop a specific NetBEUI session. It does this using the bind, unbind, and drop commands, respectively, as in nbadmin bind eth0 or nbadmin drop 102. (You can obtain NetBEUI session numbers from nbview.)

For the most part, you'll only need to issue a netb start command, then start Samba. The NetBEUI stack adds a parameter to nmbd (the NetBIOS name daemon), smbd (the SMB daemon), and smbclient (the text-mode Samba client) to specify whether to use TCP/IP or NetBEUI. This parameter is -Z; the commands shown later in this section pass it the value NETBEUI.

To sum up, you can turn Linux into a NetBEUI SMB/CIFS server called NAME by recompiling the kernel and Samba with the Procom NetBEUI stack, rebooting, shutting down Samba (if necessary), and typing the following commands:

# netb start

# nmbd -Z NETBEUI

# smbd -Z NETBEUI -S NAME

You can place these commands in a startup script, or modify your regular Samba startup script to incorporate these changes. Other Samba features, such as the definitions of shares, all function as described in Chapter 7. The advantages of this procedure boil down to two factors. First, it can be used with some older clients that support NetBEUI but not TCP/IP. Second, it reduces the chance of malicious outside access to the computer via Samba, because NetBEUI isn't normally routed over the Internet. On the downside, NetBEUI support is duplicated in NetBIOS over TCP/IP, which works with all recent Linux kernels and versions of Samba, and doesn't require patching or recompiling the kernel or Samba.

Thursday, November 30, 2006

Obtaining a NetBEUI Stack for Linux

In addition to patching the Linux kernel and Samba, the NetBEUI stack comes with a number of tools that let you configure and manipulate it. This configuration tends to be fairly simple, and in most cases you'll use new Samba options to control the computer's NetBEUI behavior, but the separate utilities can be useful for troubleshooting and for learning more about NetBEUI. One utility in particular, netb, is also required to start up the NetBEUI stack, as described shortly.

NetBEUI

NetBEUI Features and Capabilities

Like AppleTalk and IPX, NetBEUI was designed with small networks in mind. In fact, NetBEUI is even more limited than its competing small-network protocols, because it's restricted to networks of just 256 computers. NetBEUI uses computer names similar to TCP/IP hostnames, but there is no underlying numeric addressing system, as is true of TCP/IP, AppleTalk, and IPX; NetBEUI uses the computer's name directly. These names are two-tiered in nature, much like AppleTalk's names (which include a computer name and a network zone name). NetBEUI calls its higher-order groupings workgroups or domains, depending upon whether a centralized computer exists to control logins (domains support this feature, but workgroups leave authentication to individual servers). When it starts up and periodically thereafter, a computer configured to use NetBEUI makes a broadcast to announce its presence.

NetBEUI can theoretically be used over just about any network medium, but it's most commonly used over Ethernet. Like AppleTalk and IPX, NetBEUI can coexist on Ethernet with TCP/IP or other network stacks.

NetBEUI is most frequently used in conjunction with the SMB/CIFS file- and printer-sharing protocols. These are comparable in scope to NFS/lpd for Unix and Linux, AppleTalk's equivalent protocols, or NCP. They can also be used over TCP/IP, and in fact this configuration is very common, even on networks that contain Windows computers exclusively. Because it's not easily routed, however, NetBEUI offers some security benefits—a distant attacker is unlikely to be able to launch a successful attack against a NetBEUI server.

Tuesday, November 28, 2006

Using Linux IPX/SPX Software

- Kernel NCPFS support— The Linux kernel includes support for the NCP filesystem in the Network File Systems submenu of the File Systems menu. This support allows a Linux computer to mount NetWare file shares. You need the ncpmount program, which usually ships in a package called ncpfs, to accomplish this task.

- LinWare— This package provides limited NCP server support. At the time of this writing, however, the current version is 0.95 beta, which was designed for the 1.3.x kernels—in other words, the software hasn't been updated since 1996. This might change in the future, though. The package is housed under the name lwared at ftp://sunsite.unc.edu/pub/Linux/system/network/daemons/.

- Mars_nwe— This is another NetWare server package for Linux. This package is hosted at http://www.compu-art.de/mars_nwe/, which is largely in German. English documentation is in the Mars_nwe HOWTO document, http://www.redhat.com/support/docs/tips/Netware/netware.html. Mars_nwe supports both file and print services. Its primary configuration file is /etc/nwserv.conf or /etc/nwserv/nwserv.conf, and it can be started by typing nwserv, if it's not started automatically by a startup script.

All of these IPX/SPX packages require you to have IPX support compiled into your kernel, as described in Chapter 1. Some distributions also require a separate package, usually called ipxutils, which contains utilities for activating and controlling the IPX/SPX network stack. (Alternatively, some distributions include these tools in the ncpfs package.)

If you intend to run a server for NetWare clients, Mars_nwe configuration is usually not too difficult, because the default configuration works fairly well. The configuration file is also usually very well commented, so you can learn how to configure it by reading its comments. You should pay particular attention to a few details:

- Section 1 of the file defines the volumes that are to be shared. In Linux terms, these are directories. The configuration file that ships with your copy of the server may or may not define volumes that you'd want to share.

- Section 7 controls password encryption options. You may need to enable nonencrypted passwords if your network doesn't have a regular NetWare bindery server—a computer that handles authentication.

- Section 13 defines users who are to be allowed access to the server. You may need to add usernames and passwords to this section, duplicating the regular Linux username and password configuration. Passwords are stored in an unencrypted form, which is a potential security flaw. If the network has a bindery, though, you can remove these entries after starting Mars_nwe for the first time, so the security risk may not be as bad as it first appears. Instead of dealing with individual accounts, you can set up Section 15 to do this automatically, but the drawback is that all the accounts will have the same password.

The Mars_nwe package includes the means to automatically enable IPX support on your network interface. This convenience doesn't exist in the case of Linux's NetWare client support as implemented in ncpmount, however. Before you can use this command, you should enable auto-configuration with the ipx_configure command. You can then mount a NetWare volume with ncpmount. The entire procedure might resemble the following:

# ipx_configure --auto_interface=on --auto_primary=on

# ncpmount -S NW_SERV -U anne -P p4rtu3a /mnt/nwmount

This sequence enables auto-detection of the local network number and mounts volumes stored on NW_SERV associated with the user anne at /mnt/nwmount, using the password p4rtu3a.

IPX/SPX Features and Capabilities

As you might guess from the name and addressing scheme, IPX/SPX is designed for internetworking—that is, linking networks together. This is accomplished via IPX routers, which work much like TCP/IP routers in broad detail. (In fact, a single system can function as both a TCP/IP and an IPX router.) A simple network may not require a router, but IPX/SPX does support the option.

IPX/SPX servers use a protocol known as the Service Advertisement Protocol (SAP) to periodically announce their names and the services they make available, such as shared directories or printers. Other systems on the local network segment will "hear" these announcements, and IPX routers should echo them to other network segments. This design can help make locating the right server easy, but it can also increase network traffic as the network size increases; with more servers, there will be more SAP broadcasts consuming network bandwidth.

Monday, November 27, 2006

The Role of the TCP/IP Stack

On an individual Linux computer, TCP/IP is a good choice because of its support for so many important network protocols. Although many non-Unix platforms developed proprietary protocol stacks in the 1980s, Unix systems helped pioneer TCP/IP, and most Unix network protocols use TCP/IP. Linux has inherited these protocols, and so a Linux-only or Linux/Unix network operates quite well using TCP/IP alone; chances are you won't need to use any other network stack in such an environment.

Common protocols supported by TCP/IP include HTTP, FTP, the Simple Mail Transfer Protocol (SMTP), the Network File System (NFS), Telnet, Secure Shell (SSH), the Network News Transfer Protocol (NNTP), the X Window System, and many others. Because of TCP/IP's popularity, tools that were originally written for other network stacks can also often use TCP/IP. In particular, the Server Message Block (SMB)/Common Internet File System (CIFS) file-sharing protocols used by Windows can link to TCP/IP via the Network Basic Input/Output System (NetBIOS), rather than tying to the NetBIOS Extended User Interface (NetBEUI), which is the native DOS and Windows protocol stack. (All versions of Windows since Windows 95 also support TCP/IP.) Similarly, Apple's file-sharing protocols now operate over TCP/IP as well as over AppleTalk.

Although it's very popular and can fill many network tasks, TCP/IP isn't completely adequate for all networking roles. For instance, your network might contain some systems that continue to use old OSs that don't fully support TCP/IP. An old Macintosh might only support file sharing over AppleTalk, for instance, or you might have DOS or Windows systems configured to use IPX or NetBEUI. In these situations, Linux's support for alternative network stacks can be a great boon.

Wrapping and Unwrapping Data

The OSI Network Stack Model

Sunday, November 26, 2006

Configuring a Static IP Address

Configuring Network Interfaces

Loading a driver, as described earlier in this chapter, is the first step in making a network interface available. To use the interface, you must assign it an IP address and associated information, such as its network mask (also called the subnet mask or netmask). This job is handled by the ifconfig utility, which displays information on an interface or changes its configuration, depending upon how it's called.

Basic ifconfig Syntax and Use

The ifconfig utility's syntax is deceptively simple:

ifconfig [interface] [options]

The program behaves differently depending upon what parameters it's given. On a broad level, ifconfig can do several different things:

- If used without any parameters, ifconfig returns the status of all currently active network interfaces. Used in this way, ifconfig is a helpful diagnostic tool.

- If given a single interface name (such as eth0 or tr1), ifconfig returns information on that interface only. Again, this is a useful diagnostic tool.

- If fed options in addition to an interface name, ifconfig modifies the interface's operation according to the options' specifications. Most commonly, this means activating or deactivating an interface.
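The third use, assigning an address and netmask, can be sketched as follows. This is an illustration only: the function names are hypothetical, the address in the usage note is an arbitrary private address, and the second function prints the ifconfig command instead of running it so that you can review it first. The first function converts a prefix length such as 24 into the dotted netmask form.

```shell
# Convert a prefix length (0-32) to a dotted-quad netmask.
prefix_to_netmask() {
    bits=$1
    mask=""
    for i in 1 2 3 4; do
        if [ "$bits" -ge 8 ]; then
            octet=255
            bits=$((bits - 8))
        else
            # Set the top $bits bits of this octet; the rest are zero.
            octet=$((256 - (1 << (8 - bits))))
            bits=0
        fi
        mask="${mask}${mask:+.}${octet}"
    done
    echo "$mask"
}

# Hypothetical helper: print (rather than run) an ifconfig command that
# assigns ADDRESS with the given prefix length to INTERFACE and activates it.
static_ip_cmd() {
    echo "ifconfig $1 $2 netmask $(prefix_to_netmask "$3") up"
}
```

For example, static_ip_cmd eth0 192.168.1.10 24 prints ifconfig eth0 192.168.1.10 netmask 255.255.255.0 up. Typing ifconfig eth0 afterward, the second use in the list above, is a quick way to confirm the result.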

Saturday, November 25, 2006

Using a DHCP Client

Most Linux distributions give you the option of using DHCP during installation. You should be able to select a DHCP option when configuring the network settings. If not, or if you need to reconfigure the computer after installation, the easiest way to enable this feature is usually to use a GUI configuration tool, such as Linuxconf (Red Hat or Mandrake), COAS (Caldera), or YaST or YaST2 (SuSE). For instance, Figure 2.1 shows the YaST2 configuration screen in which this option is set. Click Automatic Address Setup (via DHCP), and the system will obtain its IP address via DHCP.

Unfortunately, DHCP configuration isn't always quite this easy. Potential problems include the following:

- Incompatible DHCP clients— Four DHCP clients are common on Linux systems: pump, dhclient, dhcpxd, and dhcpcd (don't confuse either of the latter two with dhcpd, the DHCP server). Although all four work properly on many networks, some networks use DHCP servers that don't get along well with one or another of the Linux DHCP clients. You might therefore need to replace your DHCP client package with another one.

- Incompatible DHCP options— DHCP client options sometimes cause problems. In practice, this situation can be difficult to distinguish from an incompatible DHCP client, but the solution is less radical: You can edit the DHCP startup script to change its options. Unfortunately, you'll need to learn enough about your DHCP client to have some idea of what options to edit. Reading the man page may give you some ideas.

- Multi-NIC configurations— If your computer has two or more network interface cards (NICs), you may need to get the DHCP client to obtain an IP address for only some cards, or to disregard some information (such as the gateway address, described in more detail shortly in "Adjusting the Routing Table") for some NICs. Again, editing the DHCP client startup script may be necessary, or you may need to create a custom script to correct an automatic configuration after the fact.
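When troubleshooting any of these problems, a useful first step is to find out which DHCP client is actually installed. The sketch below checks the command search path for the four clients named above; the function name installed_dhcp_clients is hypothetical. Because these programs often live in directories such as /sbin that may not be on an ordinary user's path, a missing name here doesn't prove the client is absent.

```shell
# List which of the four DHCP clients named above are found on the PATH.
installed_dhcp_clients() {
    for client in pump dhclient dhcpxd dhcpcd; do
        if command -v "$client" >/dev/null 2>&1; then
            echo "$client"
        fi
    done
}
```

Once you know which client is in use, its man page and the startup script that calls it are the places to look for options to adjust.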

Friday, November 24, 2006

Loading Network Drivers

append="ether=0,0,0x240,eth0"

You can include several options within the quotes after append= by separating them with spaces. You can use this trick to force a particular Ethernet card to link itself to a particular network interface (such as eth0) on a multi-interface system by identifying the I/O port used by a specific card. In most cases, you won't need to pass any options to a driver built into the kernel; the driver should detect the network card and make it available without further intervention, although you must still activate it in some other way.

If your network card drivers are compiled as modules, you can pass them parameters via the /etc/modules.conf file (which is called /etc/conf.modules on some older distributions). For instance, this file might contain lines like the following:

alias eth0 ne

options ne io=0x240

This pair of lines tells the system to use the ne driver module for eth0, and to look for the board on I/O port 0x240. In most cases, such configurations will be unnecessary, but you may need to make the link explicit in some situations. This is especially true if your system has multiple network interfaces. The GUI configuration tools provided by many distributions can help automate this process; you need only select a particular model of card or driver from a list, and the GUI tools make the appropriate /etc/modules.conf entries.
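To check what a GUI tool or an earlier edit has written, you can query the file for a given interface. The following is a minimal sketch that assumes the simple alias and options line format shown above; the function name driver_for is hypothetical.

```shell
# Hypothetical helper: print the driver module aliased to an interface in a
# modules.conf-style file, followed by any options set for that module.
# The file path defaults to /etc/modules.conf, as named in the text.
driver_for() {
    iface=$1
    file=${2:-/etc/modules.conf}
    awk -v iface="$iface" '
        $1 == "alias" && $2 == iface { driver = $3 }
        # Remember everything after the module name on an options line.
        $1 == "options" && NF >= 3 { opts[$2] = substr($0, index($0, $3)) }
        END {
            if (driver == "") exit 1
            if (driver in opts) print driver, opts[driver]
            else print driver
        }
    ' "$file"
}
```

With the two lines shown above in the file, driver_for eth0 prints ne io=0x240. The function returns a failure status when no alias exists for the interface.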

When you've created an entry in /etc/modules.conf, Linux will attempt to load the network drivers automatically whenever it detects an attempt to start up a network interface. If you should need to do this manually for any reason, you may use the insmod command to do the job:

# insmod ne

This command will load the ne module, making it available for use. If kernel module auto-loading doesn't work reliably, you can force the issue by placing such a command in a startup script such as /etc/rc.d/rc.local or /etc/rc.d/boot.local.

If your connection uses PPP, SLIP, or some other software protocol for communicating over serial, parallel, or other traditionally nonnetwork ports, you must load the drivers for the port itself and for the software protocol in question. You do this in the same way you load drivers for a network card—by building the code into the kernel file proper or by placing it in a module and loading the module. In most cases, you'll need to attend to at least two drivers: one for the low-level hardware and another for the network protocol. Sometimes one or both of these will require additional drivers. For instance, a USB modem may require you to load two or three drivers to access the modem.

TCP/IP Network Configuration

Thursday, November 23, 2006

Installing and Using a New Kernel

Unfortunately, copying the kernel to /boot isn't enough to let you use the kernel. You must also modify your boot loader to boot the new kernel. Most Linux distributions use the Linux Loader (LILO) as a boot loader, and you configure LILO by editing /etc/lilo.conf. Listing 1.1 shows a short lilo.conf file that's configured to boot a single kernel.

Listing 1.1 A sample lilo.conf file

boot=/dev/sda

map=/boot/map

install=/boot/boot.b

prompt

default=linux

timeout=50

image=/boot/vmlinuz

label=linux

root=/dev/sda6

read-only

To modify LILO to allow you to choose from the old kernel and the new one, follow these steps:

- Load /etc/lilo.conf in a text editor.

- Duplicate the lines identifying the current default kernel. These lines begin with an image= line, and typically continue until another image= line, an other= line, or the end of the file. In the case of Listing 1.1, the lines in question are the final four lines.

- Modify the copied image= line to point to your new kernel file. You might change it from image=/boot/vmlinuz to image=/boot/bzImage-2.4.17, for instance. (Many Linux distributions call their default kernels vmlinuz.)

- Change the label= line for the new kernel to something unique, such as mykernel or 2417, so that you can differentiate it at boot time from the old kernel. Ultimately, you'll select the new kernel from a menu or type its name at boot time.

- Save your changes.

- Type lilo to install a modified boot loader on your hard disk.
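Because the steps above involve copying a block of lines, it's easy to forget to change the copied label= line. The sketch below is a hypothetical pre-check, not part of LILO: it prints any label that appears more than once in the file, so you can catch the mistake before typing lilo.

```shell
# Hypothetical check: print any label= value that appears more than once in
# a lilo.conf-style file. No output means every label is unique.
duplicate_labels() {
    file=${1:-/etc/lilo.conf}
    sed -n 's/^[[:space:]]*label[[:space:]]*=[[:space:]]*//p' "$file" |
        sort | uniq -d
}
```

If the copied stanza still carries label=linux, the function prints linux; once the new label is unique it prints nothing.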

The next time you boot the computer, LILO should present you with the option of booting your old kernel or the new one, in addition to any other options it may have given you in the past. Depending upon how it's configured, you may see the new name you entered in Step 4 in a menu, or you may be able to type it at a lilo: prompt.

If you can boot the computer and are satisfied with the functioning of your new kernel, you can make it the default by modifying the default= line in /etc/lilo.conf. Change the text after the equal sign to specify the label you gave the new kernel in Step 4, then type lilo again at a command prompt.

There are ways to boot a Linux kernel other than LILO. Some distributions use the Grand Unified Boot Loader (GRUB) instead of LILO. Consult the GRUB documentation for details on how to configure it. You can also use the DOS program LOADLIN to boot Linux. To do this, you need to place a copy of the kernel on a DOS-accessible disk—a DOS partition or a floppy disk. You can then boot Linux by typing a command like the following:

C:> LOADLIN BZIMAGE root=/dev/sda6 ro

In this example, BZIMAGE is the name of the kernel file, as accessed from DOS, and /dev/sda6 is the Linux identifier for the root partition. The ro option specifies that the kernel should initially mount this partition read-only, which is standard practice. (Linux later remounts it for read-write access.) LOADLIN can be a useful tool for testing new kernels, if you prefer not to adjust your LILO setup initially. It's also a good way to boot Linux in an emergency, should LILO become corrupt. If you don't have a copy of DOS, FreeDOS (http://www.freedos.org) is an open source version that should do the job. LOADLIN ships on most Linux distributions' installation CD-ROMs, usually in a directory called dosutils or something similar.

Common Kernel Compilation Problems

- Buggy or incompatible code— Sometimes you'll find that drivers are buggy or incompatible with other features. This is most common when you try to use a development kernel or a non-standard driver you've added to the kernel. The usual symptom is a compilation that fails with one or more error messages. The usual solution is to upgrade the kernel, or at least the affected driver, or omit it entirely if you don't really need that feature.

- Unfulfilled dependencies— If a driver relies upon some other driver, the first driver should only be selectable after the second driver has been selected. Sometimes, though, the configuration scripts miss such a detail, so you can configure a system that won't work. The most common symptom is that the individual modules compile, but they won't combine into a complete kernel file. (If the driver is compiled as a module, it may return an error message when you try to load it.) With any luck, the error message will give you some clue about what you need to add to get a working kernel. Sometimes, typing make dep and then recompiling will fix the problem. Occasionally, compiling the feature as a module rather than directly into the kernel (or vice-versa) will overcome such problems.

- Old object files— If you compile a kernel, then change the configuration and compile again, the make utility that supervises the process should detect what files may be affected by your changes and recompile them. Sometimes this doesn't work correctly, though, with the result being an inability to build a complete kernel, or sometimes a failure when compiling individual files. Typing make clean should clear out preexisting object files (the compiled versions of individual source code files), thus fixing this problem.

- Compiler errors— The GNU C Compiler (GCC) is normally reliable, but there have been incidents in which it's caused problems. Red Hat 7.0 shipped with a version of GCC that could not compile a 2.2.x kernel, but this problem has been overcome with the 2.4.x kernel series. (Red Hat 7.0 actually shipped with two versions of GCC; to compile a kernel, you had to use the kgcc program rather than gcc.)

- Hardware problems— GCC stresses a computer's hardware more than many programs, so kernel compilations sometimes turn up hardware problems. These often manifest as signal 11 errors, so called because that's the error message returned by GCC. Defective or overheated CPUs and bad RAM are two common sources of these problems. See http://www.bitwizard.nl/sig11/ for additional information on this problem.
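Two of the fixes in this list, make dep and make clean, are often tried together before rebuilding. The following is a minimal sketch of that recovery sequence, not a definitive procedure. The function name retry_kernel_build is hypothetical, and the default source location of /usr/src/linux is an assumption that you should adjust for your system.

```shell
# Hypothetical helper: regenerate dependency information, clear stale
# object files, then rebuild the kernel image. Each step runs only if the
# previous one succeeded.
# $1 = kernel source tree (assumed default: /usr/src/linux)
retry_kernel_build() {
    src="${1:-/usr/src/linux}"
    (
        cd "$src" || exit 1
        make dep &&      # recompute which source files depend on others
        make clean &&    # remove preexisting object files
        make bzImage     # rebuild the main kernel file
    )
}
```

The subshell keeps the cd from changing the directory of the shell that calls the function.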

If you can't resolve a kernel compilation problem yourself, try posting a query to a Linux newsgroup, such as comp.os.linux.misc. Be sure to include information on your distribution, the kernel version you're trying to compile, and any error messages you see. (You can omit all the nonerror compilation messages.)
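One way to separate error messages from routine compilation output is to save the build output to a file and filter it. The sketch below assumes that interesting lines contain the word error, the *** marker that make prints when a command fails, or the phrase signal 11. These patterns are a heuristic rather than a complete list, and the function name build_errors is hypothetical.

```shell
# Hypothetical helper: print only the lines of a saved build log that look
# like errors, so routine compilation messages can be left out of a post.
# The patterns are a heuristic (an assumption), not an exhaustive list.
# $1 = path to a file holding the saved output of a make command
build_errors() {
    grep -i -E 'error|\*\*\*|signal 11' "$1"
}
```

For instance, after saving output with make bzImage > build.log 2>&1, typing build_errors build.log shows the candidate lines. Read them before posting, because the filter can both miss relevant lines and include harmless ones.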

A Typical Kernel Compilation

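Once the kernel is configured, you compile it and install its modules by issuing four commands from the kernel source directory:

# make dep

# make bzImage

# make modules

# make modules_install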

The first of these commands performs some housekeeping tasks. Specifically, dep is short for dependency: make dep determines which source code files depend on others, given the options you've chosen. If you omit this step, the compilation may go awry, because adding or omitting features changes the dependency information that the rest of the build relies on.

The second of these commands builds the main kernel file, which will then reside in the /usr/src/linux/arch/i386/boot directory under the name bzImage. There are variants on this command. For instance, for particularly small kernels, make zImage works as well (the bzImage form allows a boot loader like LILO to handle larger kernels than does the zImage form). Both zImage and bzImage are compressed forms of the kernel. This is the standard for x86 systems, but on some non-x86 platforms, you should use make vmlinux instead. This command creates an uncompressed kernel. (On non-x86 platforms, the directory in which the kernel is built will use a name other than i386, such as ppc for PowerPC systems.)
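After the build finishes, it can be worth confirming that the image landed where expected. The sketch below covers only the x86 case described here. The function name check_kernel_image is hypothetical, and the default source tree of /usr/src/linux is an assumption.

```shell
# Hypothetical helper: confirm that the compressed kernel image exists in
# the x86 boot directory after "make bzImage" or "make zImage".
# $1 = image name, bzImage (default) or zImage
# $2 = kernel source tree (assumed default: /usr/src/linux)
check_kernel_image() {
    image="${2:-/usr/src/linux}/arch/i386/boot/${1:-bzImage}"
    if [ -f "$image" ]; then
        echo "found $image"
    else
        echo "missing $image" >&2
        return 1
    fi
}
```

On a non-x86 platform the i386 component of the path would differ, so the function would need adjusting there.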

The make modules command, as you might guess, compiles the kernel modules. The make modules_install command copies these module files to appropriate locations in /lib/modules. Specifically, a subdirectory named after the kernel version number is created, and holds subdirectories for specific classes of drivers.
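To see what make modules_install created, you can list the directories under the version-named subdirectory. This is a small sketch. The function name module_dirs is hypothetical, and the choice to default to the running kernel's version (as reported by uname -r) is an assumption, so pass the version you just compiled if it differs.

```shell
# Hypothetical helper: list the directories that exist under the modules
# directory for one kernel version.
# $1 = kernel version (default: the running kernel, from uname -r)
# $2 = modules root (default: /lib/modules)
module_dirs() {
    version="${1:-$(uname -r)}"
    find "${2:-/lib/modules}/$version" -type d | sort
}
```

The exact layout of the subdirectories varies between kernel series, so treat the output as a quick check that the install step did something rather than as a fixed list.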

The entire kernel compilation process takes anywhere from a few minutes to several hours, depending on the options you select and the speed of your computer. Typically, building the main kernel file takes more time than does building modules, but this might not be the case if you're building an unusually large number of modules. At each step, you'll see many lines reflecting the compilation of individual source code files. Some of these compilations may show warning messages, which you can usually safely ignore. Error messages, on the other hand, are more serious, and will halt the compilation process.
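Because an error halts one step but not the commands you type afterward, it can help to run the four steps from a small function that stops at the first failure and names the step that failed. This is a sketch under assumptions: the function name build_kernel is hypothetical, and /usr/src/linux is assumed as the default source tree.

```shell
# Hypothetical helper: run the four build steps in order and stop at the
# first one that fails, reporting which step it was.
# $1 = kernel source tree (assumed default: /usr/src/linux)
# The body is a subshell, so the cd does not affect the calling shell.
build_kernel() (
    cd "${1:-/usr/src/linux}" || exit 1
    for target in dep bzImage modules modules_install; do
        if ! make "$target"; then
            echo "make $target failed; later steps skipped" >&2
            exit 1
        fi
    done
)
```

Warnings do not stop the loop, because make still returns success when a compilation only warns.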

Drivers: Modules or Built-In

A feature that's compiled into the kernel is always available, and is available early in the boot process. There's no chance that the feature will become unavailable because it's been unloaded. Some features must be compiled in this way, or not at all. For instance, the filesystem used for the root directory must be available in the kernel at boot time, and so must be compiled directly into the kernel. If you use the Root File System on NFS option, described earlier, you'll need to compile support for your network hardware into the kernel.

The downside to compiling a feature directly into the kernel is that it consumes RAM at all times. It also increases the size of the kernel on disk, which can complicate certain methods of booting Linux. For these reasons, Linux distributions compile as modules most of the options that can be built that way. This allows the distribution maintainers to keep their default kernels manageable, while still providing support for a wide array of hardware. Because most network hardware can be compiled as modules, most distributions compile drivers for network cards in this way.

As somebody who's maintaining specific Linux computers, though, you might decide to do otherwise. If you compile a network card driver directly into the kernel, you won't need to configure the system to load the module before you attempt to start networking. (In truth, most distributions come preconfigured to handle this task correctly, so it's seldom a problem.) On the other hand, if you maintain a wide variety of Linux systems, you might want to create a single kernel and set of kernel modules that you can install on all the computers, in which case you might want to compile network drivers as modules.
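If you do build a network driver as a module, you can check whether it is loaded before networking starts. The sketch below reads /proc/modules, where each line begins with a module name followed by a space. The function name module_loaded is hypothetical, and tulip in the usage note is only an example driver name.

```shell
# Hypothetical helper: succeed if the named module is currently loaded,
# judged by finding its name at the start of a line in /proc/modules.
# $1 = module name
# $2 = file to read (default: /proc/modules)
module_loaded() {
    grep -q "^$1 " "${2:-/proc/modules}"
}
```

A typical use would be module_loaded tulip || modprobe tulip, which loads the driver only when it is not already present.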

Features such as network stacks can also be compiled as modules or directly into the kernel. (TCP/IP is an exception; it must be compiled directly into the kernel or not at all, although a few of its suboptions can be compiled as modules.) You might decide to compile, say, NFS client support as a module if the computer only occasionally mounts remote NFS exports. Doing this will save a small amount of RAM when NFS isn't being used, at the cost of greater opportunities for problems and a very small delay when mounting an NFS export.
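To review how a feature is currently set, you can inspect the saved kernel configuration. The sketch below assumes that the configuration tools stored your choices in a .config file at the top of the source tree, with =y marking a built-in feature and =m marking a module. The function name feature_mode and the default path are assumptions.

```shell
# Hypothetical helper: report whether an option is built in, modular, or
# not enabled, based on the saved kernel configuration file.
# $1 = option name, such as CONFIG_NFS_FS
# $2 = configuration file (assumed default: /usr/src/linux/.config)
feature_mode() {
    config="${2:-/usr/src/linux/.config}"
    if grep -q "^$1=y\$" "$config"; then
        echo built-in
    elif grep -q "^$1=m\$" "$config"; then
        echo module
    else
        echo disabled
    fi
}
```

For example, feature_mode CONFIG_NFS_FS prints module if NFS client support was configured as a module.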

As you might gather, there's no single correct answer to the question of whether to compile a feature or driver directly into the kernel or as a module, except of course when modular compilation isn't available. As a general rule of thumb, I recommend you consider how often the feature will be in use. If it will be used constantly, compile the feature as part of the kernel; if it will be used only part of the time, compile the feature as a module. You might want to favor modular compilation, though, if your kernel becomes large enough that you can't boot it with LOADLIN (a DOS utility for booting Linux), because LOADLIN can be an important way to boot Linux in some emergency situations.